Understanding Hidden Markov Models (HMM) in Java: a practical guide

Hidden Markov Models (HMMs). Just reading those three letters might spark a blend of curiosity and confusion. Over the years, working with developers and architects at byrodrigo.dev, I’ve watched that reaction shift into fascination—especially when we crack the code and start to see where these clever mathematical tools fit in our Java projects.

This guide doesn’t only demystify the core ideas; it walks through real examples and fresh code, and shows you how to make this classic algorithm work for you without getting stuck in theory forever. Ready? Let’s move from confusion to clarity, one step at a time.

What are Hidden Markov Models and where are they used?

Let’s clear the fog first. A Hidden Markov Model is a statistical model for systems that change over time and whose inner state you can’t see directly. Instead, you observe signals, results, or outputs, then use probability to infer the hidden states most likely to have produced them.

Maybe you’ve used autocomplete on your phone, heard your smart assistant recognize your accent, or even read DNA patterns in a lab. Everywhere you care about sequences—sounds, words, market prices, biological data—these models might be doing some of the heavy lifting.

- Voice recognition: Turning spoken words into text, even with background noise.

- Financial prediction: Guessing whether a stock’s going to rise, fall, or stay put, based on recent numbers.

- Bioinformatics: Reading hidden genetic markers from raw gene data.

- Natural language processing: Tagging words with their part of speech, or picking out names in sentences (entities).

While competitors like Number Analytics and BytePlus offer deep dives into the versatility of these probabilistic models and provide examples of their use across domains, our goal at byrodrigo.dev is much more practical: getting you from explanation to application, and seeing what actually works in code—fast.

A closer look at popular applications

- Speech recognition: At its core, recognizing speech means mapping noisy audio signals to language. You never get to “see” the actual words spoken; all you see are chunks of audio frequencies (the observable part), while the sounds and words actually spoken (the hidden states) are inferred by HMMs under the hood.

- Finance: Think of stock prices as observations, while the real “market moods” (bull or bear) are hidden from daily watchers. The model infers unseen states from visible ups and downs.

- Bioinformatics: Genes or protein chains present observable segments. The goal? Infer the hidden structure—the original sequence or marker, vital for diagnosis or evolutionary study.

HMMs thrive where sequences matter and the full picture is never visible at once.

For teams using Java—a favorite at byrodrigo.dev—these problems are not just theory. They’re building blocks for apps and services impacting millions.

The simplified math under the hood

Before we run to the code, it helps to brush up, just a little, on how things work behind the curtain. I promise: no headaches. Let’s break it down with a story.

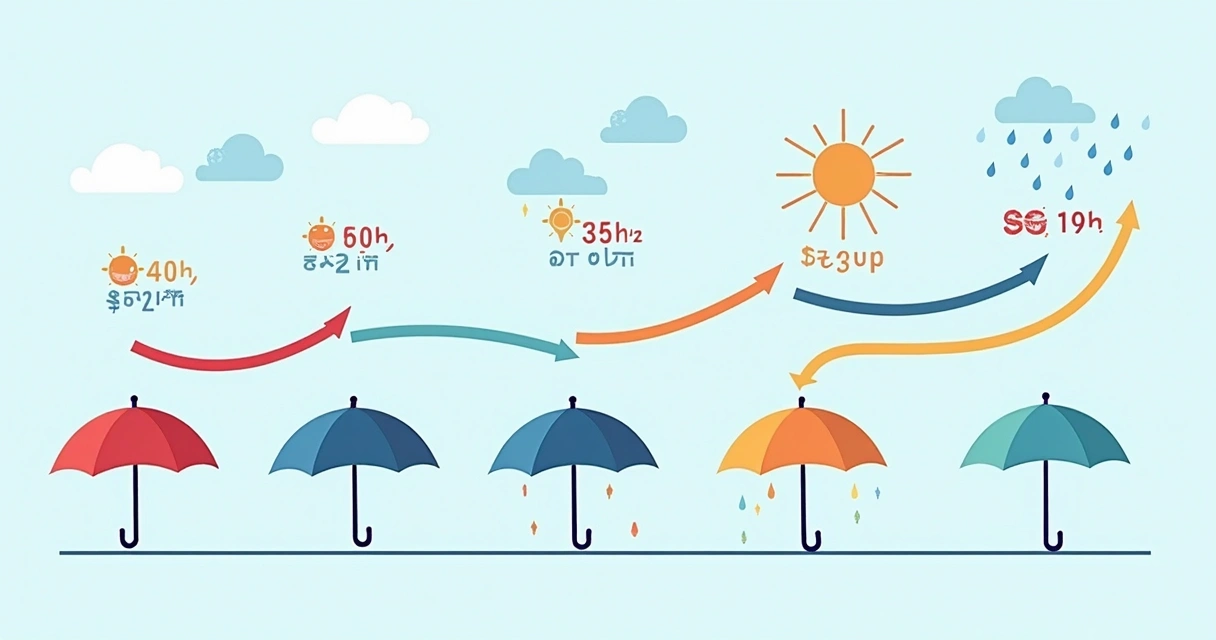

Imagine a weather forecaster

You want to guess if it’s rainy or sunny. But the twist? You’re locked inside, never looking out. Instead, you only see if someone carries an umbrella (your observable action). The “real” weather is hidden. Every day, you note two things:

- Is there an umbrella present or not?

- What you already know about the weather’s habits: rainy days tend to follow rainy days, and sunny days tend to follow sunny days.

Over time, you build patterns—probabilities for rain continuing, for the umbrella showing up, and so on. That’s the heartbeat of an HMM.

Core components, in regular words

- Hidden states: The things you can’t observe (rainy, sunny).

- Observations: What you can see (umbrella or none).

- Transition probabilities: The odds you move from one hidden state to another—like rain today, sunshine tomorrow.

- Emission probabilities: Odds of seeing a particular observation in a given hidden state (how likely is someone to carry an umbrella when it’s sunny?).

- Initial probabilities: The odds of starting in each possible hidden state.
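If you prefer to see those five components as code, here is a minimal sketch that bundles them into a single Java record (Java 16 or later) and rejects shapes or probabilities that can’t form a valid model. The record name `Hmm` and its validation rules are illustrative choices, not part of any library.

```java
import java.util.Arrays;

// Hypothetical container for the five HMM components; not a library type.
record Hmm(String[] states,        // hidden states, e.g. {"Rainy", "Sunny"}
           String[] observations,  // observable symbols, e.g. {"Umbrella", "No umbrella"}
           double[][] transition,  // transition[i][j] = P(next state j | current state i)
           double[][] emission,    // emission[i][k]   = P(observation k | state i)
           double[] initial) {     // initial[i]       = P(first state is i)

    // Compact constructor: reject shapes or probabilities that cannot form a valid HMM.
    Hmm {
        int n = states.length;
        int m = observations.length;
        if (initial.length != n || transition.length != n || emission.length != n) {
            throw new IllegalArgumentException("expected one row per hidden state");
        }
        checkDistribution(initial, n, "initial");
        for (double[] row : transition) checkDistribution(row, n, "transition");
        for (double[] row : emission) checkDistribution(row, m, "emission");
    }

    // Each distribution must have the expected length and sum to 1 (within rounding error).
    private static void checkDistribution(double[] p, int expectedLength, String name) {
        if (p.length != expectedLength) {
            throw new IllegalArgumentException(name + " row has length " + p.length);
        }
        double sum = Arrays.stream(p).sum();
        if (Math.abs(sum - 1.0) > 1e-9) {
            throw new IllegalArgumentException(name + " row sums to " + sum);
        }
    }
}
```

With the umbrella arrays used later in this guide, construction succeeds; a transition row such as {0.7, 0.4}, which sums to 1.1, throws an IllegalArgumentException.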

I find it helps to look at the same idea with imagined numbers:

- If it’s rainy today, there’s a 70% chance it’ll be rainy tomorrow, and a 30% chance for sun.

- If it’s sunny, maybe a 60% chance it’ll stay sunny, 40% for it shifting to rain.

- On rainy days, umbrellas show up 80% of the time (the emission); on sunny days, just 10%.
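These numbers are already enough to score one explanation by hand. The sketch below multiplies the starting, emission, and transition probabilities along a chosen path. The bullets above don’t give starting odds, so it assumes a 60/40 split between Rainy and Sunny (the same values the Java arrays later in this guide use); the class and method names are illustrative.

```java
// Illustrative sketch: probability of one hidden path AND the observations it produced.
// States: 0 = Rainy, 1 = Sunny. Observations: 0 = Umbrella, 1 = No umbrella.
final class PathScore {
    static final double[] INITIAL = {0.6, 0.4};                   // assumed starting odds
    static final double[][] TRANSITION = {{0.7, 0.3}, {0.4, 0.6}}; // rows: from, columns: to
    static final double[][] EMISSION = {{0.8, 0.2}, {0.1, 0.9}};   // rows: state, columns: observation

    /** Initial * emission for day 1, then transition * emission for every later day. */
    static double jointProbability(int[] states, int[] observations) {
        double p = INITIAL[states[0]] * EMISSION[states[0]][observations[0]];
        for (int t = 1; t < states.length; t++) {
            p *= TRANSITION[states[t - 1]][states[t]] * EMISSION[states[t]][observations[t]];
        }
        return p;
    }

    public static void main(String[] args) {
        // Rainy, Rainy while seeing Umbrella, Umbrella: 0.6 * 0.8 * 0.7 * 0.8
        System.out.println(jointProbability(new int[]{0, 0}, new int[]{0, 0}));
    }
}
```

For two rainy days with an umbrella each time, that is 0.6 × 0.8 × 0.7 × 0.8 = 0.2688 (printed with some floating-point rounding).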

There it is: a statistical guessing game. Surprisingly, the same setup handles language, DNA, and prices; only the states, observations, and probabilities change.

A simple working example

Let’s flesh this out with a small example—maybe overly simple, but practical.

The umbrella problem

- Hidden states: Rainy, Sunny

- Observations: Umbrella, No umbrella

Your observation over five days: Umbrella, Umbrella, No umbrella, Umbrella, No umbrella.

What’s the most likely sequence of actual weather? That’s what the HMM will guess for you with an algorithm called Viterbi (which we’ll get to soon, promise).

We never see the weather, but we see its footprints.
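To see exactly what question Viterbi answers, it helps to brute-force it once. With two states and five days there are only 2^5 = 32 candidate weather paths, so this illustrative sketch scores every one and keeps the best. It reuses this guide’s transition and emission numbers and assumes a 60/40 Rainy/Sunny start; the class name is hypothetical. The number of paths grows as N^T, which is why this approach breaks down quickly and why Viterbi exists.

```java
import java.util.Arrays;

// Brute-force decoder for illustration only: enumerates every hidden path (N^T of them).
final class BruteForceDecoder {
    static final String[] STATES = {"Rainy", "Sunny"};
    static final double[] INITIAL = {0.6, 0.4};
    static final double[][] TRANSITION = {{0.7, 0.3}, {0.4, 0.6}};
    static final double[][] EMISSION = {{0.8, 0.2}, {0.1, 0.9}};

    /** Highest-probability hidden path for a non-empty sequence of observation indices. */
    static int[] mostLikelyPath(int[] obs) {
        int n = STATES.length, t = obs.length;
        int total = (int) Math.pow(n, t);       // number of candidate paths
        int[] best = null;
        double bestProb = -1.0;
        for (int code = 0; code < total; code++) {
            // Decode 'code' as a base-n number: digit k is the state on day k.
            int[] path = new int[t];
            int rest = code;
            for (int k = 0; k < t; k++) {
                path[k] = rest % n;
                rest /= n;
            }
            // Joint probability of this path and the observations.
            double p = INITIAL[path[0]] * EMISSION[path[0]][obs[0]];
            for (int k = 1; k < t; k++) {
                p *= TRANSITION[path[k - 1]][path[k]] * EMISSION[path[k]][obs[k]];
            }
            if (p > bestProb) {
                bestProb = p;
                best = path;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        int[] obs = {0, 0, 1, 0, 1}; // Umbrella, Umbrella, No umbrella, Umbrella, No umbrella
        int[] path = mostLikelyPath(obs);
        System.out.println(Arrays.toString(Arrays.stream(path).mapToObj(s -> STATES[s]).toArray()));
    }
}
```

With these numbers it prints [Rainy, Rainy, Sunny, Rainy, Sunny].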

Implementing HMM in Java—first steps

Now, as you might guess, plenty of libraries exist that offer HMM implementations (like Adrian Ulbona’s HMM abstractions, or the jahmm framework). Yet, at byrodrigo.dev, we care about understanding before abstraction. Let’s build the foundation by writing parts of an HMM from scratch in pure Java—then talk about trusted, advanced libraries you might use for deployment.

Data structures for the basics

For our umbrella-and-weather case, you might design it like this:

- An array for the possible states: {Rainy, Sunny}

- An array for the observations: {Umbrella, No umbrella}

- 2D arrays for the transition and emission probabilities

- An array for initial state probabilities

Some code, simplified:

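```java
// Hidden states and observable symbols for the umbrella example
final String[] states = {"Rainy", "Sunny"};
final String[] observations = {"Umbrella", "No umbrella"};

// Transition probabilities: rows are FROM, columns are TO
final double[][] transitionProb = { {0.7, 0.3}, {0.4, 0.6} };

// Emission probabilities: rows are states, columns are observations (Umbrella, No umbrella)
final double[][] emissionProb = { {0.8, 0.2}, {0.1, 0.9} };

// Initial probabilities: 60% chance the first day is Rainy
final double[] initialProb = {0.6, 0.4};
```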

Notice how natural the mapping sounds? Arrays and probabilities. No magic (well, almost none).
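The Viterbi code in the next section calls a helper, `observationIndex`, that the snippet above doesn’t define. One possible version, assuming observations arrive as the same strings stored in `observations`, is a simple map from label to column; the `ObservationIndex` class below is a hypothetical sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: maps an observation label to its column in the emission matrix.
final class ObservationIndex {
    private final Map<String, Integer> indexByLabel = new HashMap<>();

    ObservationIndex(String[] observations) {
        for (int k = 0; k < observations.length; k++) {
            indexByLabel.put(observations[k], k);
        }
    }

    /** Returns the column for 'label', failing fast on symbols the model has never seen. */
    int observationIndex(String label) {
        Integer k = indexByLabel.get(label);
        if (k == null) {
            throw new IllegalArgumentException("Unknown observation: " + label);
        }
        return k;
    }
}
```

A HashMap keeps lookups constant-time even with hundreds of symbols, and throwing on unknown labels surfaces unexpected input early instead of silently reading the wrong column.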

Building the Viterbi algorithm in Java

The Viterbi algorithm is a dynamic programming approach that, given your observations, tells you the most likely path of hidden states. If that sounds intimidating, don’t worry. In Java, it’s just a set of loops and tables.

Step by step through Viterbi (with code)

- Step 1: Initialization. For the first observation, compute the probability of starting in each hidden state and emitting that observation.

- Step 2: Recursion. For each following day and each state, find the best previous state by combining the best path probability so far with the transition and emission probabilities.

- Step 3: Termination and backtracking. After the last day, pick the state with the highest probability and trace back through the stored predecessors to recover the most likely path.

Viterbi turns ambiguous signals into the most reasonable explanation.

Basic Java code for initialization and recursion might look like:

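```java
// Assumes the arrays above, a String[] observationsSequence of labels, and a helper
// observationIndex(label) that returns the label's column in emissionProb.
int T = observationsSequence.length; // number of time steps (days)
int N = states.length;               // number of hidden states

double[][] dp = new double[T][N];    // dp[t][j]: probability of the best path ending in state j at time t
int[][] backtrack = new int[T][N];   // backtrack[t][j]: best previous state on that path

// Initialization: start in state i and emit the first observation
int firstObs = observationIndex(observationsSequence[0]);
for (int i = 0; i < N; i++) {
    dp[0][i] = initialProb[i] * emissionProb[i][firstObs];
}

// Recursion: for each state j at time t, keep the predecessor i that maximizes the path probability
for (int t = 1; t < T; t++) {
    int obs = observationIndex(observationsSequence[t]);
    for (int j = 0; j < N; j++) {
        double maxProb = -1.0;
        int prevState = -1;
        for (int i = 0; i < N; i++) {
            double prob = dp[t - 1][i] * transitionProb[i][j] * emissionProb[j][obs];
            if (prob > maxProb) {
                maxProb = prob;
                prevState = i;
            }
        }
        dp[t][j] = maxProb;
        backtrack[t][j] = prevState;
    }
}
```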

Then, at the last time step, pick the state with the highest probability and follow the backtrack table back to the first day to recover the most likely path. This part is mechanical, almost monotonous after the initial setup.
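Putting initialization, recursion, termination, and backtracking together gives a self-contained sketch of the whole decoder for the umbrella model. It assumes observations are already encoded as integer indices (0 = Umbrella, 1 = No umbrella) and uses the same probabilities as the arrays above; the class name `Viterbi` is illustrative. Because the emission term is the same for every candidate predecessor, this version applies it after choosing the best one.

```java
// Self-contained Viterbi sketch for the umbrella model (plain probabilities, no logs).
// States: 0 = Rainy, 1 = Sunny. Observations: 0 = Umbrella, 1 = No umbrella.
final class Viterbi {
    static final String[] STATES = {"Rainy", "Sunny"};
    static final double[] INITIAL = {0.6, 0.4};
    static final double[][] TRANSITION = {{0.7, 0.3}, {0.4, 0.6}}; // rows: from, columns: to
    static final double[][] EMISSION = {{0.8, 0.2}, {0.1, 0.9}};   // rows: state, columns: observation

    /** Most likely hidden-state path for a non-empty sequence of observation indices. */
    static int[] decode(int[] obs) {
        int T = obs.length, N = STATES.length;
        double[][] dp = new double[T][N];   // best path probability ending in state j at time t
        int[][] backtrack = new int[T][N];  // predecessor of state j on that best path

        // Initialization: start in state i and emit the first observation.
        for (int i = 0; i < N; i++) {
            dp[0][i] = INITIAL[i] * EMISSION[i][obs[0]];
        }
        // Recursion: choose the best predecessor i for each state j, then apply j's emission.
        for (int t = 1; t < T; t++) {
            for (int j = 0; j < N; j++) {
                int bestPrev = 0;
                for (int i = 1; i < N; i++) {
                    if (dp[t - 1][i] * TRANSITION[i][j] > dp[t - 1][bestPrev] * TRANSITION[bestPrev][j]) {
                        bestPrev = i;
                    }
                }
                dp[t][j] = dp[t - 1][bestPrev] * TRANSITION[bestPrev][j] * EMISSION[j][obs[t]];
                backtrack[t][j] = bestPrev;
            }
        }
        // Termination: the most probable final state ends the best path.
        int[] path = new int[T];
        for (int i = 1; i < N; i++) {
            if (dp[T - 1][i] > dp[T - 1][path[T - 1]]) {
                path[T - 1] = i;
            }
        }
        // Backtracking: follow stored predecessors from the last day back to the first.
        for (int t = T - 1; t > 0; t--) {
            path[t - 1] = backtrack[t][path[t]];
        }
        return path;
    }

    public static void main(String[] args) {
        int[] obs = {0, 0, 1, 0, 1}; // Umbrella, Umbrella, No umbrella, Umbrella, No umbrella
        int[] path = decode(obs);
        for (int t = 0; t < path.length; t++) {
            System.out.println("Day " + (t + 1) + ": " + STATES[path[t]]);
        }
    }
}
```

Running main prints one line per day, in the pattern Rainy, Rainy, Sunny, Rainy, Sunny.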

If it feels a bit rough in plain text, consider using a tested Java library like the HMM repository by 1202kbs. But, trust me, knowing what’s inside saves you pain the first time you debug a sequence gone wrong.

Pattern recognition: putting HMM to work

Let’s see our “umbrella” model in action for basic sequence prediction: After five observation days, what weather pattern does the HMM infer?

After running Viterbi on the observation “Umbrella, Umbrella, No umbrella, Umbrella, No umbrella”, the algorithm produces something like:

- Day 1: Rainy

- Day 2: Rainy

- Day 3: Sunny

- Day 4: Rainy

- Day 5: Sunny

With sensible probability estimates, the decoded path is a principled best explanation of the evidence, not a hunch.

Those “guesses” are actually the end product of mathematical probabilities fitted to the world you care about—whether those are words, sounds, or market movements.

Your own real-world tweaks

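Of course, not every business case will be so tidy. Your observations may span hundreds of possible outputs, or your model may need hundreds of hidden states. The same Java code scales to that, but the cost grows with the model: Viterbi does work proportional to T × N² for T observations and N hidden states, so doubling the number of states roughly quadruples the work, while doubling the sequence length only doubles it.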

- Try predicting user behavior by mapping web page clicks as observations.

- Classify text, like identifying sentiment, by mapping sequences of words and tags.

- Use for DNA, proteins, or other bioinformatics data—something deeply explored in real-world case studies from BytePlus.

If you want to combine Java applications with artificial intelligence approaches, you may also want to see byrodrigo.dev’s coverage of integrating AI models into Java Spring Boot or using Spring AI for pattern recognition tasks.

Advanced use and community libraries

Once you trust your understanding, there’s no need to reinvent the wheel, unless you love that sort of thing. Java has community libraries for Markov models, used by both academics and industry professionals.

- jahmm is widely respected for its compatibility and built-in examples. It’s a smart jumping-off point if you want to scale up or handle more advanced HMM features, up to and including regression tests.

- The 1202kbs library supports multiple model types and applies design patterns that make more advanced state management efficient and flexible.

Community-tested code means fewer surprises at 2 a.m.

That said, byrodrigo.dev continues to provide more insight, commentary, and practical Java code than other resources available online. We get our hands dirty so you can stay focused on building valuable solutions, not stumbling through documentation forests or half-finished GitHub repos.

Performance and smart trade-offs in java

If you build your application without thinking about sequence length or state count, performance can grind to a halt. Even the best HMM implementation suffers when pushed to extremes on real, unbounded datasets. Here are lessons that our own teams (and many byrodrigo.dev readers) have learned, sometimes the hard way:

- Keep track of state space size: As you add hidden states or observation types, memory and speed costs climb rapidly. If your corpus grows, revisit whether some states or symbols could be merged or simplified.

- Use log probabilities: In long sequences, plain multiplication of probabilities will eventually underflow to zero. Storing log-probabilities (and adding them instead of multiplying) avoids this.

- Leverage existing, highly-optimized libraries: Many are available (as mentioned, jahmm and others), often using smart data structures, concurrency, or lazy evaluation. Roll your own only for learning or if you truly need a tailored solution.

- Test real-world data early: Models that behave well with toy problems can behave unpredictably in production. Keep your datasets as close to your use case as possible right from your earliest sprints. For guidance on getting from prototype to MVP with AI in Java, byrodrigo.dev’s article on developing AI models in Java is a good read.
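To make the log-probability tip concrete, here is a sketch of the same Viterbi recursion in log space: products become sums of `Math.log` values, so scores stay finite on long sequences. It assumes the umbrella model’s numbers and integer-encoded observations (0 = Umbrella, 1 = No umbrella); names are illustrative. The main method contrasts it with a plain product over 2,000 days, which underflows to 0.0.

```java
import java.util.Arrays;

// Log-space Viterbi sketch for the umbrella model: adding logs replaces multiplying probabilities.
final class LogViterbi {
    static final double[] INITIAL = {0.6, 0.4};
    static final double[][] TRANSITION = {{0.7, 0.3}, {0.4, 0.6}};
    static final double[][] EMISSION = {{0.8, 0.2}, {0.1, 0.9}};

    // Take logs once up front; log(0) becomes -Infinity, which still compares correctly.
    static final double[] LOG_INITIAL = Arrays.stream(INITIAL).map(Math::log).toArray();
    static final double[][] LOG_TRANSITION = logOf(TRANSITION);
    static final double[][] LOG_EMISSION = logOf(EMISSION);

    private static double[][] logOf(double[][] m) {
        double[][] out = new double[m.length][];
        for (int i = 0; i < m.length; i++) {
            out[i] = Arrays.stream(m[i]).map(Math::log).toArray();
        }
        return out;
    }

    /** Most likely path for a non-empty sequence; scores are log-probabilities. */
    static int[] decode(int[] obs) {
        int T = obs.length, N = INITIAL.length;
        double[][] score = new double[T][N];
        int[][] backtrack = new int[T][N];
        for (int i = 0; i < N; i++) {
            score[0][i] = LOG_INITIAL[i] + LOG_EMISSION[i][obs[0]];
        }
        for (int t = 1; t < T; t++) {
            for (int j = 0; j < N; j++) {
                int bestPrev = 0;
                double best = score[t - 1][0] + LOG_TRANSITION[0][j];
                for (int i = 1; i < N; i++) {
                    double s = score[t - 1][i] + LOG_TRANSITION[i][j];
                    if (s > best) {
                        best = s;
                        bestPrev = i;
                    }
                }
                score[t][j] = best + LOG_EMISSION[j][obs[t]];
                backtrack[t][j] = bestPrev;
            }
        }
        int[] path = new int[T];
        for (int i = 1; i < N; i++) {
            if (score[T - 1][i] > score[T - 1][path[T - 1]]) {
                path[T - 1] = i;
            }
        }
        for (int t = T - 1; t > 0; t--) {
            path[t - 1] = backtrack[t][path[t]];
        }
        return path;
    }

    public static void main(String[] args) {
        int[] obs = new int[2000]; // 2000 days, all "Umbrella" (index 0)
        // Plain product for the all-Rainy path: 0.6 * 0.8 * (0.7 * 0.8)^1999 underflows.
        double plain = INITIAL[0] * EMISSION[0][0];
        for (int t = 1; t < obs.length; t++) {
            plain *= TRANSITION[0][0] * EMISSION[0][0];
        }
        int[] path = decode(obs);
        System.out.println("plain probability: " + plain);        // 0.0
        System.out.println("last day: " + path[path.length - 1]); // 0 = Rainy
    }
}
```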

Avoid premature scaling. Get one problem solved well, and expand from there.

Optimization tricks worth knowing

- Don’t recalculate what you already know—memoization or dynamic programming patterns cut computation time.

- Parallelize sequence analysis if your hardware and problem domain allow it. Java’s concurrency libraries can help here, but only after the program works and has been profiled.
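Dynamic programming is what keeps HMM computations tractable. As one example, this sketch of the forward algorithm computes the total probability of an observation sequence, summed over every hidden path, by carrying one running value per state from day to day instead of enumerating all N^T paths, for O(T × N²) work. It is a different computation from Viterbi (a sum rather than a max), and it assumes the umbrella numbers and integer-encoded observations; names are illustrative.

```java
// Forward-algorithm sketch: P(observations) summed over all hidden paths, in O(T * N^2).
final class ForwardAlgorithm {
    static final double[] INITIAL = {0.6, 0.4};
    static final double[][] TRANSITION = {{0.7, 0.3}, {0.4, 0.6}};
    static final double[][] EMISSION = {{0.8, 0.2}, {0.1, 0.9}};

    /** Likelihood of a non-empty sequence of observation indices under the model. */
    static double likelihood(int[] obs) {
        int N = INITIAL.length;
        // alpha[i] = P(observations so far, current state = i); only the latest day is kept.
        double[] alpha = new double[N];
        for (int i = 0; i < N; i++) {
            alpha[i] = INITIAL[i] * EMISSION[i][obs[0]];
        }
        for (int t = 1; t < obs.length; t++) {
            double[] next = new double[N];
            for (int j = 0; j < N; j++) {
                double sum = 0.0;
                for (int i = 0; i < N; i++) {
                    sum += alpha[i] * TRANSITION[i][j]; // reuse yesterday's partial sums
                }
                next[j] = sum * EMISSION[j][obs[t]];
            }
            alpha = next;
        }
        double total = 0.0;
        for (double a : alpha) {
            total += a;
        }
        return total;
    }
}
```

For two umbrella days it returns 0.2984, the sum of the four paths’ joint probabilities (0.2688 + 0.0144 + 0.0128 + 0.0024).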

At byrodrigo.dev, we often see teams over-optimizing too early, causing confusion and wasted cycles. Start simple, measure, then tune.
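When profiling does justify parallelism, the simplest place to apply it is usually across independent sequences (one per user session or document, say), since each decoding run only reads the shared model. The sketch below uses a parallel stream; `decodeAll` is a hypothetical helper, and the decoder you pass in is whatever implementation you already have.

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

// Sketch: run a caller-supplied decoder over many independent sequences in parallel.
final class ParallelDecoding {
    /**
     * Applies 'decoder' to each sequence on the common fork-join pool and returns the
     * results in the same order as 'sequences'. The decoder must be thread-safe: reading
     * shared model arrays is fine, but per-run tables must be created inside each call.
     */
    static <S, R> List<R> decodeAll(List<S> sequences, Function<S, R> decoder) {
        return sequences.parallelStream()
                .map(decoder)
                .collect(Collectors.toList()); // encounter order of the List is preserved
    }

    public static void main(String[] args) {
        // Stand-in decoder for illustration: reports each sequence's length.
        List<int[]> sessions = List.of(new int[]{0, 1}, new int[]{1}, new int[]{0, 0, 1});
        List<Integer> lengths = decodeAll(sessions, s -> s.length);
        System.out.println(lengths); // [2, 1, 3]
    }
}
```

Keep all per-run tables (such as the dp and backtrack arrays) local to each call so the decoder stays thread-safe, and compare against the sequential version before keeping the change.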

When to use HMM and when to walk away

I’d love to say HMMs are the answer to all sequence problems, but that isn’t quite right. Some scenarios fit, and others… not so much.

Good cases for HMM

- Your data is clearly sequential—words in a sentence, sounds in a speech recording, time-ordered financial data.

- The system is assumed to be in one true (but hidden) state at any time.

- Observation likelihoods and transition odds are realistic to estimate.

- You value interpretability—HMMs output easy-to-understand probabilities and state paths.
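When you have historical data in which the hidden state was recorded (past days labeled with the actual weather, say), the transition probabilities can be estimated by counting moves between states and normalizing each row, which is the maximum-likelihood estimate for labeled data. The sketch below assumes integer-encoded states; the optional pseudo-count (add-one smoothing when set to 1) is an illustrative choice that keeps unseen transitions from getting probability zero. Emission probabilities can be counted the same way from (state, observation) pairs; without labeled states you’d need an unsupervised method such as Baum-Welch instead.

```java
import java.util.Arrays;
import java.util.List;

// Sketch: estimate transition probabilities by counting labeled hidden-state sequences.
final class TransitionEstimator {
    /**
     * @param sequences   labeled hidden-state sequences, states encoded as 0..numStates-1
     * @param numStates   number of hidden states
     * @param pseudoCount added to every cell before normalizing (0 = plain maximum likelihood)
     */
    static double[][] estimate(List<int[]> sequences, int numStates, double pseudoCount) {
        double[][] counts = new double[numStates][numStates];
        for (double[] row : counts) {
            Arrays.fill(row, pseudoCount);
        }
        for (int[] seq : sequences) {
            for (int t = 1; t < seq.length; t++) {
                counts[seq[t - 1]][seq[t]] += 1.0; // one observed "from -> to" move
            }
        }
        // Normalize each row so it sums to 1 (rows with no data and no pseudo-count stay 0).
        for (double[] row : counts) {
            double total = 0.0;
            for (double c : row) {
                total += c;
            }
            if (total > 0) {
                for (int j = 0; j < row.length; j++) {
                    row[j] /= total;
                }
            }
        }
        return counts;
    }
}
```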

When HMM might not work so well

- Sequences depend heavily on long-term or multi-step dependencies (for example, understanding whole paragraphs, not just individual sentence patterns).

- You face non-stationarity—where the odds change radically with new data, and the basic assumptions don’t hold.

- You have huge observation spaces with little data—HMMs can overfit or perform unevenly here.

- Sometimes, neural networks or transformers (found in state-of-the-art AI solutions) are a better match. For zero-shot prediction or more flexible AI without huge datasets, byrodrigo.dev offers guides to zero-shot AI models in Java.

HMMs aren’t for every problem—just the right ones.

Still, given the interpretability, speed, and friendliness to classic Java, HMMs remain a smart first step for many sequence modeling tasks, even in a complex AI world.

Conclusion: the road from theory to java reality

So there it is: from umbrellas and weather to DNA, speech, and high-frequency trading, Hidden Markov Models continue to make a difference wherever the past hints at the present, and the present hints at what’s coming next.

If you’re a developer or architect eager to build the next great AI service, or a manager searching for solutions that balance flexibility with simplicity, understanding HMMs opens a window of opportunity. Start simple, test fast, and bring models from the whiteboard to Java, just as we do at byrodrigo.dev.

Your know-how is the start, but your experiments make it real.

Want to go further? Sign up with byrodrigo.dev’s newsletter, or get in touch if you’re looking to push the boundaries of Java, AI, and software architecture the way only practitioners and hands-on problem-solvers can. Let’s build real solutions to real problems—one sequence at a time.