When you sit down at your favorite restaurant and the menu is adjusted—maybe a vegan option pops up, or a kids’ menu appears when you’re with family—you’re seeing personalization in action. Imagine if smart assistants, virtual helpdesks, or even FAQ bots could do the same, tailoring every answer to who, where, and when you are. That’s not far-fetched. With the rise of graph-powered retrieval and modern Java, you can now make that possible for your users. Welcome to the world of graph-based retrieval-augmented generation (Graph RAG), supercharged with contextual smarts.

What is Graph RAG? A menu for every user

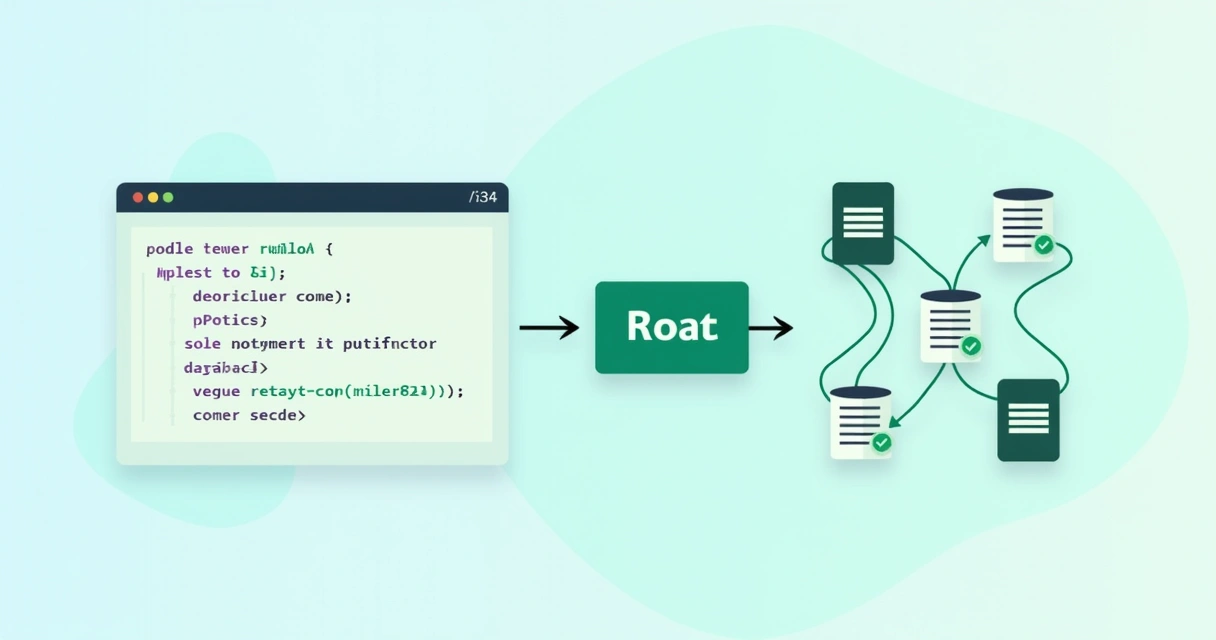

Graph RAG isn’t just about plugging vector databases into LLMs. It’s about layering in a graph model—think of nodes, edges, and relationships—so that the answers your AI provides are guided not only by document similarity but also by the user’s profile and context.

Answers, shaped by context, feel personal.

To paint a picture: if you’re a hospital administrator asking your AI assistant about “leave policies”, you want management policy answers, not medical guidance for nurses. Another person in the same hospital, say a surgeon, might expect completely different details about leave requests.

Graph RAG brings together:

- Conventional retrieval-augmented generation pipelines (fetching relevant chunks, sending them to the LLM)

- Knowledge graphs, representing entities (users, documents, facts, roles, etc.) and rich relationships

- Real-time or session context (location, role, time, intent)

This fusion lets you filter and prioritize results by who is asking and in what situation, not just by what they typed. Recent frameworks like PGraphRAG highlight how integrating structured user knowledge into retrieval boosts personalization. Our platform takes this further, leading with deeper context awareness even when user data is sparse.

How personalization enters the pipeline

In traditional retrieval, the pipeline looks up vectors similar to the query, passes the matching chunks to the LLM as context, and the LLM generates a response. Graph RAG, as discussed in several guides on best practices for graph data modeling in RAG systems, can upgrade this flow with two big moves:

- Enrich embeddings or queries with user/session metadata (role, location, intent, etc.), so the search is precise from the start.

- Filter results via graph relationships, ensuring only the most relevant info is picked for the current user in their context.

Sometimes, the boost comes from enhancing your embeddings with context, so similar items for one role might look different for another. Other times, the magic is in filtering, so irrelevant content never even enters the conversation.
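A minimal sketch of the first move, assuming your embedding service accepts plain text: prepend the session context as a tagged prefix, so the same question embeds differently for different roles. The `[key=value]` tag format and the `enrichForEmbedding` helper are hypothetical conventions for illustration, not part of any particular library.

```java
import java.util.Map;
import java.util.TreeMap;

public class QueryEnrichment {

    // Hypothetical helper: turns session context into a stable text prefix.
    // TreeMap sorts the keys, so the same context always yields the same prefix.
    static String enrichForEmbedding(String queryText, Map<String, String> context) {
        StringBuilder sb = new StringBuilder();
        new TreeMap<>(context).forEach((key, value) ->
                sb.append('[').append(key).append('=').append(value).append("] "));
        return sb.append(queryText).toString();
    }

    public static void main(String[] args) {
        String doctorText = enrichForEmbedding("latest protocol",
                Map.of("role", "doctor", "intent", "protocol"));
        String patientText = enrichForEmbedding("latest protocol",
                Map.of("role", "patient", "intent", "protocol"));
        System.out.println(doctorText);  // [intent=protocol] [role=doctor] latest protocol
        System.out.println(patientText); // [intent=protocol] [role=patient] latest protocol
        // Each enriched string would then go to your embedding service.
    }
}
```

Sorting the keys matters more than it looks: if the prefix order changed between calls, identical contexts would produce slightly different embeddings.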

The blog byrodrigo.dev covers these strategies, often with practical Java and Spring examples, to help teams put this theory into action.

Real-world example: adaptive assistants and dynamic FAQs

Let’s say your AI assistant serves a hospital, helping doctors, nurses, admins, and patients each day. You want it to:

- Give doctors detailed medical procedures or research when they ask “latest protocol”

- Give patients simple explanations, or direct them to forms and support

- Hide admin-only documentation from public queries

Responses might even adjust based on time (night shift? emergency?), the user’s intent, or the team’s recent activities. Contextual personalization in Graph RAG lets you do exactly that.
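To make the night-shift idea concrete, here is a sketch that derives a coarse time context from `LocalTime`. The shift boundaries (07:00 and 19:00) and the `ShiftContext` names are assumptions for illustration; a real hospital would load them from configuration. Because the night shift wraps past midnight, the code tests membership in the day range and treats everything else as night.

```java
import java.time.LocalTime;

public class ShiftContext {

    enum Shift { DAY, NIGHT }

    // Assumed boundaries: DAY covers [07:00, 19:00), NIGHT is everything else
    static final LocalTime DAY_START = LocalTime.of(7, 0);
    static final LocalTime NIGHT_START = LocalTime.of(19, 0);

    static Shift shiftAt(LocalTime time) {
        boolean isDay = !time.isBefore(DAY_START) && time.isBefore(NIGHT_START);
        return isDay ? Shift.DAY : Shift.NIGHT;
    }

    public static void main(String[] args) {
        System.out.println(shiftAt(LocalTime.of(10, 30))); // DAY
        System.out.println(shiftAt(LocalTime.of(2, 15)));  // NIGHT
        System.out.println(shiftAt(LocalTime.of(19, 0)));  // NIGHT
    }
}
```

The resulting value can travel with role and intent as one more context field, for example to favor on-call contacts at night.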

How Graph RAG fits Java and Spring thinking

If you’ve ever built Spring Boot pipelines with layered business rules, session filters, or cache keys that depend on who’s logged in, Graph RAG will feel oddly familiar. You’re still building:

- A pipeline for the query (pre-processing, enrichment)

- Conditional logic (decisions based on context)

- Responses tailored in the output layer (mapping or formatting)

The difference? You’re tying everything together with real-time context from graphs and user/session data.
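One way to see the parallel is to write the three stages as plain `java.util.function.Function`s chained with `andThen`, much like a filter chain. The `Request` record, the role-based rule, and the stage names below are hypothetical, chosen only to show the shape of such a pipeline.

```java
import java.util.function.Function;

public class PipelineShape {

    // Hypothetical request: the query plus session context and a note for later stages
    record Request(String query, String role, String notes) {}

    // Pre-processing / enrichment: normalize the query text
    static final Function<Request, Request> ENRICH =
            r -> new Request(r.query().trim().toLowerCase(), r.role(), r.notes());

    // Conditional logic: decide how to answer based on context
    static final Function<Request, Request> DECIDE = r -> "patient".equals(r.role())
            ? new Request(r.query(), r.role(), "use plain language")
            : new Request(r.query(), r.role(), "include clinical detail");

    // Output layer: map the request into a formatted instruction
    static final Function<Request, String> FORMAT =
            r -> "[" + r.role() + "] " + r.query() + " (" + r.notes() + ")";

    static final Function<Request, String> PIPELINE = ENRICH.andThen(DECIDE).andThen(FORMAT);

    public static void main(String[] args) {
        System.out.println(PIPELINE.apply(new Request("  Leave Policy ", "patient", "")));
        // [patient] leave policy (use plain language)
    }
}
```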

What you’ll need for our example

- Java 17 or above

- Spring Boot (optional, but helpful)

- Access to a vector database or embedding service (like Weaviate, Pinecone, or any REST-powered store)

- A knowledge graph store—or just a simple in-memory graph for learning

- An LLM endpoint (OpenAI, an enterprise LLM, or even a locally running model)

Building the pipeline in four steps

Let’s break it into chunks, just like serving a custom menu for every guest.

- Create and use a sample knowledge graph (map out users, docs, roles, context relationships)

- Enrich queries with user context (add metadata to each retrieval query)

- Conditional graph and vector database queries (filter or bias search results)

- Personalize the LLM response (adapt output to context)

Let’s walk through each part with Java-centric code and thinking.

Step 1: knowledge graph setup in Java

For illustration, we’ll model a mini knowledge graph in memory. In practice, you’d connect to Neo4j, Stardog, or another graph database, and might even add learned graph representations like those described in Contextualized Graph Attention Networks. Our example keeps dependencies minimal.

Let’s define entities: User, Document, Context, and their relationships.

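```java
import java.time.LocalTime;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simple graph model classes (in-memory, for illustration only)
class User {
    String id;
    String role;      // e.g. "doctor", "patient", "admin"
    String location;
}

class Document {
    String id;
    String type;      // e.g. "protocol", "form", "policy"
    String content;
    String audience;  // doctor, patient, admin, etc.
}

class Context {
    String intent;
    LocalTime timeAccessed;
    String userRole;
}

class KnowledgeGraph {
    Map<String, User> users = new HashMap<>();
    Map<String, Document> documents = new HashMap<>();

    // IDs of documents whose audience matches the role
    // and whose type contains the intent
    List<String> findRelevantDocs(String role, String intent) {
        return documents.values().stream()
                .filter(doc -> doc.audience.equalsIgnoreCase(role))
                .filter(doc -> doc.type.contains(intent))
                .map(doc -> doc.id)
                .toList(); // Stream.toList() requires Java 16+
    }
}
```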

You can extend these classes with edges or use a graph library. But even with this, you’re able to sketch out relationships and where queries will go.
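If you want explicit edges before adopting a graph library, a typed adjacency list is enough. In this sketch the relation names (`HAS_ROLE`, `INTENDED_FOR`) and node IDs are made up for the hospital example; `docsForUser` walks user → role → documents, the kind of two-hop pattern a graph database would express as a match query.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class EdgeGraph {

    // A directed, labeled edge between two node IDs
    record Edge(String from, String relation, String to) {}

    // node ID -> outgoing edges, and node ID -> incoming edges
    private final Map<String, List<Edge>> outgoing = new HashMap<>();
    private final Map<String, List<Edge>> incoming = new HashMap<>();

    void addEdge(String from, String relation, String to) {
        Edge edge = new Edge(from, relation, to);
        outgoing.computeIfAbsent(from, k -> new ArrayList<>()).add(edge);
        incoming.computeIfAbsent(to, k -> new ArrayList<>()).add(edge);
    }

    // Targets of the node's outgoing edges with the given relation
    List<String> out(String node, String relation) {
        return outgoing.getOrDefault(node, List.of()).stream()
                .filter(e -> e.relation().equals(relation))
                .map(Edge::to)
                .toList();
    }

    // Sources of the node's incoming edges with the given relation
    List<String> in(String node, String relation) {
        return incoming.getOrDefault(node, List.of()).stream()
                .filter(e -> e.relation().equals(relation))
                .map(Edge::from)
                .toList();
    }

    // Two hops: user -HAS_ROLE-> role <-INTENDED_FOR- document
    List<String> docsForUser(String userId) {
        return out(userId, "HAS_ROLE").stream()
                .flatMap(role -> in(role, "INTENDED_FOR").stream())
                .distinct()
                .toList();
    }

    public static void main(String[] args) {
        EdgeGraph g = new EdgeGraph();
        g.addEdge("user:ana", "HAS_ROLE", "role:doctor");
        g.addEdge("doc:sepsis-protocol", "INTENDED_FOR", "role:doctor");
        g.addEdge("doc:visiting-hours", "INTENDED_FOR", "role:patient");
        System.out.println(g.docsForUser("user:ana")); // [doc:sepsis-protocol]
    }
}
```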

Step 2: embedding context into retrieval

Let’s imagine queries are passed as a structured object, not just a plain string. This way, metadata lives at the heart of every fetch.

```java
import java.time.LocalTime;

// Query structure with metadata
class ContextualQuery {
    String queryText;
    String role;      // e.g. "doctor"
    String location;
    String intent;    // e.g. "protocol"
    LocalTime time;
}
```

Now, enhance the “embedding” or search call with these fields, so when you search vectors or documents, context is directly involved.

The right context, up front, saves work later.

Some vector databases support metadata filtering (Weaviate, Pinecone), and you can carry this data right into your REST payload or embedding request.

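```java
import java.util.Map;
import org.json.JSONObject; // org.json library

// Sketch: preparing a request body for a vector DB
JSONObject vectorRequest = new JSONObject();
vectorRequest.put("query", query.queryText);
// Map.of rejects null values, so every context field must be set here.
// The exact filter syntax differs between vector DBs.
vectorRequest.put("filters", Map.of(
        "role", query.role,
        "location", query.location,
        "intent", query.intent));
// Send vectorRequest.toString() as the JSON body to your vector DB
```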

This pattern also works with Spring AI libraries, as described in the guide on integrating AI with Java Spring Boot at byrodrigo.dev.

Connecting to a vector DB: an example with Weaviate

Assume you have a running Weaviate instance. We’ll create a class that queries it with context-filtered requests.

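```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.List;

// Weaviate client (simplified for demo; response parsing left to a JSON library)
public class WeaviateClient {

    private final String endpoint = "http://localhost:8080/v1/graphql";
    private final HttpClient http = HttpClient.newHttpClient();

    public List<String> search(ContextualQuery query) {
        // GraphQL: only Documents whose audience equals the user's role
        String graphql = """
                { Get { Document(where: {
                    path: ["audience"], operator: Equal, valueString: "%s"
                  }) { content } } }""".formatted(escape(query.role));

        // The GraphQL endpoint expects a JSON body: {"query": "<graphql>"}
        String payload = "{\"query\": \"" + escape(graphql) + "\"}";

        HttpRequest request = HttpRequest.newBuilder(URI.create(endpoint))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(payload))
                .build();
        try {
            HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
            // TODO: check response.statusCode(), parse response.body() with a JSON
            // library and collect data.Get.Document[*].content; empty list for now
            return List.of();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while querying Weaviate", e);
        }
    }

    // Escapes backslashes, quotes and newlines for use inside a JSON/GraphQL string
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }
}
```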

You could extend this to include other filters—intent, location, time—and bias the search accordingly.
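Filtering on several context fields means combining conditions with Weaviate's `And` operator and an `operands` list. The sketch below only builds the GraphQL string, so it runs without a server. The `intent` and `location` properties are assumptions about your schema, and newer Weaviate releases prefer `valueText` over the `valueString` used above, so check the filter syntax for your version. Escaping the values matters: a role string containing a quote should not be able to rewrite the query.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class WhereFilterBuilder {

    // Escape backslashes and quotes so values stay inside their string literal
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds a Weaviate-style `where` clause in which every non-null field must match.
    // Callers should omit `where` entirely when no filters apply.
    static String buildWhere(Map<String, String> filters) {
        List<String> operands = new ArrayList<>();
        filters.forEach((property, value) -> {
            if (value != null) {
                operands.add("{path: [\"" + escape(property) + "\"], operator: Equal, valueString: \""
                        + escape(value) + "\"}");
            }
        });
        return "{operator: And, operands: [" + String.join(", ", operands) + "]}";
    }

    public static void main(String[] args) {
        Map<String, String> filters = new LinkedHashMap<>(); // LinkedHashMap allows null values
        filters.put("audience", "doctor");
        filters.put("intent", "protocol");
        filters.put("location", null); // skipped: no location in this session
        System.out.println("{ Get { Document(where: " + buildWhere(filters) + ") { content } } }");
    }
}
```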

Step 3: graph-driven filtering and re-ranking

With matched candidates from your vector search, use your graph to filter or re-prioritize results, leveraging relationships beyond text similarity. This mirrors the contextual-chunking-graphpowered-rag idea, while our application adds higher semantic accuracy and answer verification.

```java
// Filter results via graph relationships
List<String> candidateDocIds = weaviateClient.search(query);
List<String> contextuallyValidDocs = knowledgeGraph.findRelevantDocs(query.role, query.intent);
List<String> finalDocs = candidateDocIds.stream()
        .filter(contextuallyValidDocs::contains)
        .toList();
```

This “intersection” step ensures the answer pool is both semantically matched and context-checked.
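The intersection is a hard filter. When you would rather re-prioritize than discard, a score boost for graph-confirmed documents is one simple option. This sketch assumes each candidate arrives with a similarity score from the vector search; the `Candidate` record and the 0.2 boost are illustrative values to tune, not recommendations.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Set;

public class GraphReRanker {

    // Hypothetical vector-search hit: document ID plus similarity score
    record Candidate(String docId, double score) {}

    static final double GRAPH_BOOST = 0.2; // assumed value, tune on your own data

    // Adds GRAPH_BOOST to graph-confirmed candidates, then sorts by score, highest first
    static List<Candidate> rerank(List<Candidate> candidates, Set<String> graphDocIds) {
        return candidates.stream()
                .map(c -> graphDocIds.contains(c.docId())
                        ? new Candidate(c.docId(), c.score() + GRAPH_BOOST)
                        : c)
                .sorted(Comparator.comparingDouble(Candidate::score).reversed())
                .toList();
    }

    public static void main(String[] args) {
        List<Candidate> hits = List.of(
                new Candidate("doc:generic-leave", 0.82),
                new Candidate("doc:admin-leave-policy", 0.75),
                new Candidate("doc:nurse-rota", 0.60));
        rerank(hits, Set.of("doc:admin-leave-policy")).forEach(System.out::println);
    }
}
```

Unlike the intersection, nothing is dropped here, so this suits relevance tuning; permission checks still belong in a hard filter.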

Enrichment, filters, and a quick comparison

There is some debate over whether it’s better to bake context into embeddings up front, or just filter after retrieval using metadata. They both have a place. If your users’ roles deeply influence meaning, it’s good to let that shape embeddings. If not, and access restrictions are strict, filters might be all you need.

- Embedding enrichment: Context alters meaning, good for semantic ranking

- Metadata filters: Simple, transparent, good for permissions and restrictions
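Metadata filters are easy to audit because the rule is just data. A sketch, assuming a hypothetical role-to-audience table in which admins and doctors may also read shared staff material while patients only see patient documents; the role names and the table itself are illustrative.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Predicate;

public class AccessFilter {

    record Doc(String id, String audience) {}

    // Assumed visibility rules: which document audiences each role may see
    static final Map<String, Set<String>> VISIBLE = Map.of(
            "admin", Set.of("admin", "staff"),
            "doctor", Set.of("doctor", "staff"),
            "patient", Set.of("patient"));

    // Unknown roles get an empty set, so they see nothing (fail closed)
    static Predicate<Doc> visibleTo(String role) {
        Set<String> allowed = VISIBLE.getOrDefault(role, Set.of());
        return doc -> allowed.contains(doc.audience());
    }

    public static void main(String[] args) {
        List<Doc> docs = List.of(
                new Doc("d1", "admin"), new Doc("d2", "patient"), new Doc("d3", "staff"));
        System.out.println(docs.stream().filter(visibleTo("patient")).map(Doc::id).toList()); // [d2]
        System.out.println(docs.stream().filter(visibleTo("admin")).map(Doc::id).toList());   // [d1, d3]
    }
}
```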

Teams using Neo4j’s GraphRAG stack get a similar set of options, but often with heavier tooling. Our solution supports both approaches, is flexible and easy to tune, and carries less development overhead and friction. For more perspective, see the recent tools ecosystem overview.

Step 4: adaptive LLM responses

Now that you’ve selected result chunks, it’s time for the LLM to generate an answer. You can instruct it to personalize the output, mentioning user specifics or formatting for the audience.

```java
// Build the LLM prompt with user context
String systemMessage = "You are a hospital assistant answering a question from a " + query.role
        + ". Use the provided documents. The user's intent is: " + query.intent
        + ". Current location: " + query.location + ".";

String llmPrompt = systemMessage
        + "\n\nContext documents:\n" + String.join("\n", finalDocs)
        + "\n\nUser query: " + query.queryText;
// Send llmPrompt to your LLM API and get a personalized response
```

Personalization isn’t only what you answer, but how.

For example, the answer might include actionable steps for admin users but plain-language explanations for patients. Studies on applying structured user knowledge, such as PGraphRAG, have shown that this kind of explicit prompt and data shaping leads to better real-world results.
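The full pipeline in this article calls a `buildPersonalizedPrompt` helper without defining it. Here is one hypothetical way to write it, shaping the style per role as well as the content. The style strings and the fallback are assumptions; `ContextualQuery` is redeclared with the Step 2 fields so the sketch compiles on its own.

```java
import java.time.LocalTime;
import java.util.List;
import java.util.Map;

public class PromptBuilder {

    // Same fields as the ContextualQuery class from Step 2
    static class ContextualQuery {
        String queryText;
        String role;
        String location;
        String intent;
        LocalTime time;
    }

    static final String DEFAULT_STYLE = "Answer clearly and concisely.";

    // Assumed per-role style instructions: how to answer, not just what
    static final Map<String, String> STYLE = Map.of(
            "admin", "Answer with numbered, actionable steps.",
            "doctor", "Use precise clinical terminology and name the relevant protocols.",
            "patient", "Use plain language, avoid jargon, and point to forms or support contacts.");

    static String buildPersonalizedPrompt(ContextualQuery query, List<String> docs) {
        // Map.of-based maps reject null keys, so check the role first
        String style = query.role == null ? DEFAULT_STYLE : STYLE.getOrDefault(query.role, DEFAULT_STYLE);
        return "You are a hospital assistant answering a question from a " + query.role + ". "
                + style + "\nThe user's intent is: " + query.intent
                + ". Current location: " + query.location + "."
                + "\n\nContext documents:\n" + String.join("\n", docs)
                + "\n\nUser query: " + query.queryText;
    }

    public static void main(String[] args) {
        ContextualQuery q = new ContextualQuery();
        q.queryText = "How do I request leave?";
        q.role = "patient";
        q.location = "Ward 3";
        q.intent = "forms";
        System.out.println(buildPersonalizedPrompt(q, List.of("Leave requests use the standard form.")));
    }
}
```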

Session logic: tying back to Spring concepts

Java developers, especially those deep in Spring pipelines, already grasp session-scoped beans, request interceptors, and role-aware filters. Graph RAG can plug into similar patterns:

- Use interceptors to inject context (role, session data) before query building

- Apply conditional beans or service implementations (admin-service, doctor-service) at the retrieval stage

- Cache context-specific results, avoiding cross-role “leakage”

Common session control and conditional pipelines in Spring map naturally onto Graph RAG logic, which makes your learning curve much shorter.
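The caching point deserves a concrete shape, because it is where cross-role leakage tends to sneak in: a cache keyed only on the query text will happily serve an admin's answer to a patient. A plain-Java sketch, assuming answers depend only on role and query text; in Spring, the equivalent idea is to include the role in the key of a `@Cacheable` method.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

public class RoleScopedCache {

    // The role is part of the key, so two roles never share an entry
    record CacheKey(String role, String queryText) {}

    private final Map<CacheKey, String> cache = new ConcurrentHashMap<>();
    private final Function<CacheKey, String> answerer;

    RoleScopedCache(Function<CacheKey, String> answerer) {
        this.answerer = answerer;
    }

    String answer(String role, String queryText) {
        // computeIfAbsent calls the answerer only on a miss. ConcurrentHashMap may block
        // other updates while it runs, so for slow LLM calls consider a dedicated cache library.
        return cache.computeIfAbsent(new CacheKey(role, queryText), answerer);
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        RoleScopedCache c = new RoleScopedCache(k -> {
            calls.incrementAndGet();
            return "answer for " + k.role();
        });
        System.out.println(c.answer("admin", "leave policy"));   // answer for admin
        System.out.println(c.answer("patient", "leave policy")); // answer for patient
        System.out.println(c.answer("admin", "leave policy"));   // answer for admin (cached)
        System.out.println("backend calls: " + calls.get());     // backend calls: 2
    }
}
```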

Practical Java: a complete pipeline snapshot

Let’s lay out the full pipeline, tying the earlier code into a flow. This is the skeleton you might grow into a real-world system:

```java
// Full personalized retrieval pipeline - sketch
public String answerQuery(ContextualQuery query) {
    // Step 1: Fetch candidates from search (filtered by metadata)
    List<String> candidateDocs = weaviateClient.search(query);

    // Step 2: Filter using graph (relationships, permissions)
    List<String> graphDocs = knowledgeGraph.findRelevantDocs(query.role, query.intent);
    List<String> selectedDocs = candidateDocs.stream()
            .filter(graphDocs::contains)
            .toList();

    // Step 3: Build LLM prompt with user context
    String prompt = buildPersonalizedPrompt(query, selectedDocs);

    // Step 4: Call LLM, get response
    String response = llmClient.generate(prompt);
    return response;
}
```

In production, you’d add exception handling, split responsibilities into services, extract configuration, and swap in your real graph/vector/LLM connectors. This pipeline matches the flow often found in Spring AI integration guides and reflects advanced patterns from contextualized graph attention research.
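A sketch of that production shape: each connector sits behind a small interface, so tests can use stubs and real Weaviate, graph, or LLM clients can be swapped in later. The interface names, the stub lambdas, and the fallback messages are all hypothetical; the point is the structure, including failing closed when retrieval breaks instead of falling back to unfiltered documents.

```java
import java.util.List;
import java.util.Set;

public class PersonalizedPipeline {

    // Hypothetical connector interfaces; implement them with your real clients
    interface VectorSearch { List<String> search(String queryText, String role); }
    interface GraphStore { Set<String> relevantDocIds(String role, String intent); }
    interface LlmClient { String generate(String prompt); }

    private final VectorSearch vectors;
    private final GraphStore graph;
    private final LlmClient llm;

    PersonalizedPipeline(VectorSearch vectors, GraphStore graph, LlmClient llm) {
        this.vectors = vectors;
        this.graph = graph;
        this.llm = llm;
    }

    String answer(String queryText, String role, String intent) {
        List<String> selected;
        try {
            Set<String> allowed = graph.relevantDocIds(role, intent);
            selected = vectors.search(queryText, role).stream()
                    .filter(allowed::contains)
                    .toList();
        } catch (RuntimeException e) {
            // Fail closed: never answer from unfiltered documents
            return "Sorry, I can't look that up right now.";
        }
        if (selected.isEmpty()) {
            return "I couldn't find material for your role on that topic.";
        }
        String prompt = "Answer for a " + role + ".\nDocuments: " + String.join(", ", selected)
                + "\nQuestion: " + queryText;
        return llm.generate(prompt);
    }

    public static void main(String[] args) {
        PersonalizedPipeline p = new PersonalizedPipeline(
                (q, r) -> List.of("doc:a", "doc:b"),
                (r, i) -> Set.of("doc:b"),
                prompt -> "LLM saw -> " + prompt.lines().skip(1).findFirst().orElse(""));
        System.out.println(p.answer("latest protocol", "doctor", "protocol"));
        // LLM saw -> Documents: doc:b
    }
}
```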

More than theory: why this matters

You don’t need to stop at assistants or dynamic FAQs. Context-sensitive retrieval is useful anywhere answers need to adapt—think banking, HR systems, personalized learning, or technical support. Contextual chunking isn’t just a buzzword, but a practical way to close the gap between “generic” AI and something really aware of your team’s needs.

Compared with other systems like the contextual-chunking-graphpowered-rag system, our approach covers more ground: it doesn’t just blend semantic and token-based search, it also adds stronger graph visualization, answer verification, and higher-quality generated answers. There will always be competitors trying new ideas, but our way is cleaner, simpler, and much easier for Java teams to run with.

With our enhanced schema modeling, flexible adapters, and streamlined APIs, personalization is not a project’s afterthought. It’s at the core, as described in AI model development best practices on byrodrigo.dev.

Putting it all together: a step-by-step checklist

- Sketch your domain’s knowledge graph—users, content, context relations. Keep it simple at first.

- Upgrade query structures to carry context alongside free text.

- Use a vector DB or embedding service. Add metadata filtering early, using role, intent, or other session data.

- Add a re-ranking/filter step where the graph weeds out irrelevant or unauthorized answers.

- Build richer LLM prompts or instructions using all known metadata.

- Tie this into your familiar Java/Spring request workflows with interceptors, custom beans, and cache patterns.

And don’t forget testing—build test cases for each context permutation you support, so no patient sees admin answers, and every doctor gets domain-specific procedures. See how this is reflected in strategies for accelerating inference in Java apps.
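Testing every context permutation can start as a loop over roles and documents with explicit invariants. This sketch uses an assumed role-to-audience table and two example invariants taken from the text (no patient sees non-patient material; every doctor gets doctor-specific documents). In a real project you would likely write it as a parameterized JUnit test against your production rules; plain Java keeps it dependency-free.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class PermutationCheck {

    record Doc(String id, String audience) {}

    // Access rules under test (assumed; load the production table in a real app)
    static final Map<String, Set<String>> VISIBLE = Map.of(
            "admin", Set.of("admin", "staff"),
            "doctor", Set.of("doctor", "staff"),
            "patient", Set.of("patient"));

    static boolean canSee(String role, Doc doc) {
        return VISIBLE.getOrDefault(role, Set.of()).contains(doc.audience());
    }

    // Checks every role against every document; an empty result means all invariants hold
    static List<String> violations(List<Doc> docs) {
        List<String> problems = new ArrayList<>();
        for (String role : VISIBLE.keySet()) {
            List<Doc> visible = docs.stream().filter(d -> canSee(role, d)).toList();
            // Invariant 1: patients only ever see patient-facing documents
            if (role.equals("patient")) {
                visible.stream()
                        .filter(d -> !d.audience().equals("patient"))
                        .forEach(d -> problems.add("patient can see " + d.id()));
            }
            // Invariant 2: doctors always get at least one doctor-specific document
            if (role.equals("doctor") && visible.stream().noneMatch(d -> d.audience().equals("doctor"))) {
                problems.add("doctor sees no doctor documents");
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        List<Doc> docs = List.of(
                new Doc("admin-budget", "admin"),
                new Doc("sepsis-protocol", "doctor"),
                new Doc("visiting-hours", "patient"));
        System.out.println(violations(docs)); // []
    }
}
```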

Stepping into your own project

If this article leaves you thinking “this isn’t as out-there as it sounded at first,” that’s the point. Adapting retrieval-augmented generation systems with graph-driven personalization does not require moonshot tech, just smart modeling and careful threading of context throughout your workflow.

For more code, concrete scenarios (zero-shot retrieval, fine-grained session adaptation), and practical MVP-building advice, you might enjoy the in-depth posts on zero-shot Java task automation.

The right answer, for the right user, at the right time.

That’s what modern personalized retrieval is aiming for. The team at byrodrigo.dev is working to equip developers, architects, and digital leaders for this next step—where context isn’t bolted on, but always in the loop.

Curious about building your first adaptive assistant, or need help refining your enterprise’s AI search? Start a conversation with byrodrigo.dev. Test out real examples, ask questions, or join our community—and give your users answers that finally feel made just for them.